Multipath interference is one of the biggest challenges for Time-of-Flight cameras. In this article, learn what multipath interference means and three methods you can use to minimize it.

Prabu Kumar | e-con Systems

Time-of-Flight (ToF) cameras are embedded vision solutions that provide real-time depth measurement, predominantly for applications that require autonomous and guided navigation. As you may know, ToF sensors illuminate the scene and measure depth from the time it takes for the emitted light to return from the target object. (To learn more about how the technology works, please visit What is a Time-of-Flight sensor? What are the key components of a Time-of-Flight camera?). To achieve this, the entire scene must be illuminated before the differences between the emitted light and its reflection are measured. However, these cameras must overcome a stumbling block in depth measurement called multipath interference (or multipath reflection). In this blog, let’s look at what multipath interference means and how you can minimize it to ensure high performance of your Time-of-Flight camera.
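As a minimal sketch of the principle described above, the round-trip travel time of light can be converted to a distance by halving the total path. The function name and the example timing value are illustrative assumptions and are not taken from any specific camera SDK.

```python
# Illustrative sketch: depth from the round-trip travel time of light.
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def depth_from_round_trip(time_s: float) -> float:
    """Return the one-way distance in meters for a measured round-trip time."""
    # Light travels to the object and back, so the one-way distance is half.
    return SPEED_OF_LIGHT_M_PER_S * time_s / 2.0


if __name__ == "__main__":
    # Hypothetical measurement: the light returns after 20 nanoseconds.
    print(f"{depth_from_round_trip(20e-9):.3f} m")
```

A round trip of 20 nanoseconds corresponds to a target roughly 3 m away, which shows why ToF sensors need very fine timing resolution.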

What is multipath interference? Why is it important in Time-of-Flight cameras?

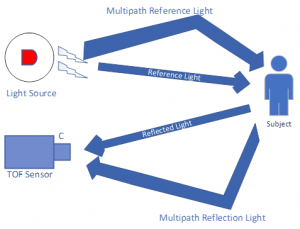

ToF sensors operate on the assumption that each pixel receives light along a single optical path. In practice, however, multiple illumination paths (from the light source) tend to get mapped to the same pixel. This is known as multipath interference, and it can be extremely detrimental to ToF cameras because it can lead to drastic measurement inaccuracies. In a nutshell, the light is reflected from the target object and from other nearby objects before reaching the camera sensor, so a pixel sees a mix of illumination paths and the depth estimate is affected.

The figure below illustrates how multipath interference occurs:

Figure 1 – Multipath reflection
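To make the effect concrete, here is a simplified sketch of how a second light path can bias the depth reported by a single pixel. It assumes a continuous-wave (indirect) ToF sensor that recovers depth from the phase of a modulated signal, which the article itself does not specify. The 20 MHz modulation frequency, the path lengths, and the amplitudes are all hypothetical values chosen for illustration.

```python
import cmath
import math

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def measured_depth(paths, mod_freq_hz):
    """Estimate the depth one pixel would report, under the assumed model.

    paths: list of (amplitude, round_trip_length_m) tuples, one per light path.
    Each path contributes a phasor whose phase grows with its path length.
    The pixel only sees the sum, so it reports the phase of the combined signal.
    """
    total = 0j
    for amplitude, round_trip_m in paths:
        phase = 2 * math.pi * mod_freq_hz * round_trip_m / SPEED_OF_LIGHT_M_PER_S
        total += amplitude * cmath.exp(1j * phase)
    # Wrap the combined phase into [0, 2*pi) and convert it back to a depth.
    combined_phase = cmath.phase(total) % (2 * math.pi)
    return SPEED_OF_LIGHT_M_PER_S * combined_phase / (4 * math.pi * mod_freq_hz)


if __name__ == "__main__":
    mod_freq_hz = 20e6  # assumed modulation frequency
    direct_only = [(1.0, 4.0)]  # object at 2 m, so a 4 m round trip
    with_bounce = [(1.0, 4.0), (0.4, 5.0)]  # plus a weaker, longer indirect path
    print(f"direct only: {measured_depth(direct_only, mod_freq_hz):.3f} m")
    print(f"with bounce: {measured_depth(with_bounce, mod_freq_hz):.3f} m")
```

In this model, the longer indirect path pulls the reported depth beyond the true 2 m. A strong contribution from a shorter path would instead pull the estimate nearer to the camera.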

The four instances where multipath interference could happen are:

- Specular interreflections in the scene – this typically happens when there is an opaque object obstructing the path of a light beam back to the sensor.

- Translucent objects – this is caused by the presence of a translucent object or surface in the scene.

- Multi-surface reflections (or diffuse interreflections) – multi-surface reflections are caused when there are multiple obstructions in the light’s path. This phenomenon is more likely to happen when the objects are placed in multiple 2D or 3D planes. This could also happen when the texture of the target object is uneven.

- Ghosting or lens flare – ghosting is the phenomenon of the light bouncing off different lens elements – or even the sensor – when the light from a natural or artificial source directly falls on them.

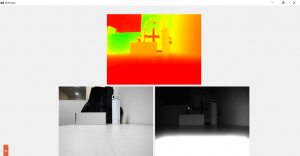

Let us now try to understand the concept using a practical example. Consider a ToF camera placed on a table as shown in the figure below:

Figure 2 – Multipath reflection caused by a camera placed on a table (image is from the point of view of the camera)

When the ToF camera is placed on the table as shown in the above figure, reflections from the table surface in the light’s path corrupt the entire depth map. As you can see, even a far object like the wall in the scene is shown as red (red indicates that the object is nearer relative to the other objects in the scene). Since, in these scenarios, several optical paths are superimposed at a single pixel, the depth measurement is significantly affected. Industry experts also consider this to be one of the leading causes of errors in even the most advanced ToF sensors.

3 ways to minimize multipath interference

Multipath interference is a challenging problem to solve since it involves the combination of multiple light paths. It is close to impossible to eliminate it completely. However, it can be minimized to ensure maximum accuracy of a Time-of-Flight camera. Let us look at these methods in detail in this section. They involve setting up the camera and the target scene in such a way that interference is minimized. Following are the steps you can take while setting up the camera.

- Before you finalize the camera position, make sure that any bright objects in the vicinity are removed. If a bright object is unavoidable (for example, a bright fixed surface), you can use a suitable tripod to place the camera at an elevated level. Please have a look at the image below to understand the difference this can make:

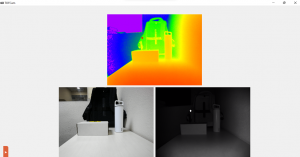

Figure 3 – Multipath interference minimized with camera placed on a tripod (image is from the point of view of the camera)

In the above setup, the camera is placed on a tripod. Here, in the depth map, we can see different color shades indicating different depths in the same scene.

- Ensure the camera is not placed near any translucent objects or in a corner. This helps avoid deviations in the light path.

- Choose a lens specifically designed for ToF sensors, with coatings and filters that help reduce multipath interference caused by lens flare.

Is e-con Systems developing a Time-of-Flight camera?

References

- Multipath Interference in Indirect Time-of-Flight Depth Sensors

- What is a Time-of-Flight sensor? What are the key components of a Time-of-Flight camera?

This content was originally published on e-con Systems’ website. It is republished here with the permission of e-con Systems.

The content & opinions in this article are the author’s and do not necessarily represent the views of RoboticsTomorrow
