Artificial Intelligence Drives Advances in Collaborative Mobile Robots

Article from MiR - Mobile Industrial Robots Inc.

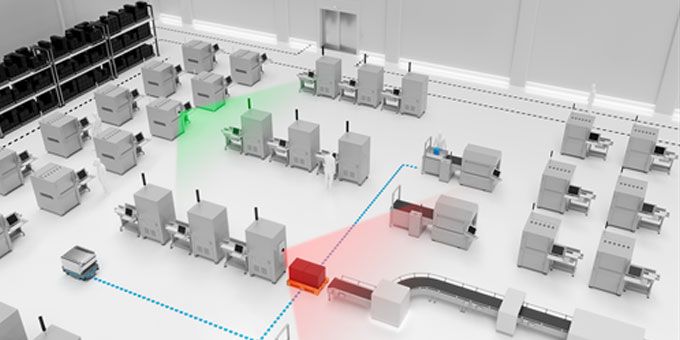

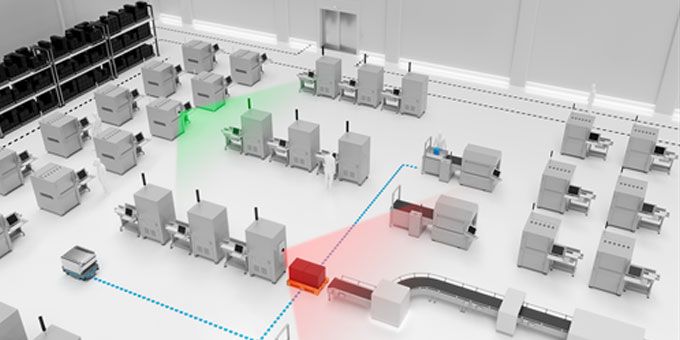

Autonomous mobile robots (AMRs) work collaboratively with people to create highly productive work environments by automating repetitive and injury-prone material transportation. While the robots use sensors and algorithms to safely navigate even dynamic environments, they aren’t able to apply this sensory input for advanced decision-making. The next step in the evolution of AMRs is the addition of artificial intelligence (AI) to increase the capabilities of smart mobile robots. AI will make these robots even more efficient, increase the range of tasks the robots can perform, and reduce the need for work-environment adaptations to accommodate them.

The Transition to Artificial Intelligence

Today, mobile robots use sensors and software for control (to define where and how the robot should move) and perception (to allow the robot to understand and react to its surroundings). Data comes from integrated laser scanners, 3D cameras, accelerometers, gyroscopes, wheel encoders, and more to produce the most efficient decisions for each situation. These technologies give AMRs many of the capabilities that have become familiar and desirable in today’s automobiles. The robots are able to dynamically navigate using the most efficient routes, have environmental awareness so they can avoid obstacles or people in their path, and can automatically charge when needed. Without AI, however, the robots react the same way to all obstacles, slowing and attempting to navigate around the person or object if possible, or stopping or backing up if there is no safe way to maneuver around it. The AMR’s standard approach is appropriate in nearly every situation, but in the same way that AI is powering new capabilities for self-driving cars and intelligent drones, it is also poised to dramatically change robotics.

Artificial Intelligence includes several branches. The AI that is used in autonomous mobile robots at this stage is focused on machine learning and vision systems.

Strategically placed, static MiR AI Cameras enable MiR robots to foresee obstacles on their routes, so they can re-route in advance for optimized navigation.

AI for collaborative robots is focused primarily on machine learning (ML) and vision systems today, which are dramatically extending earlier sensor-based capabilities. Technology advances and market maturity in several key areas are enabling these innovations:

- A wide array of small, low-cost, and power-efficient sensors allows mobile and remote devices to capture and transmit huge amounts of data about the robot’s immediate, extended, and anticipated environment as well as internal conditions.
- Cloud computing and broadband wireless communications allow the data to be stored, processed, and accessed almost instantly, from any access point. Secure virtual networks can adapt to dynamic requirements and nearly eliminate downtime and bottlenecks.
- Powerful new AI-focused processor architectures are widely available from traditional semiconductor companies such as AMD, Intel, NVIDIA, and Qualcomm as well as from new players in the field, including Google and Microsoft. While traditional broad-use semiconductors are facing the limits of Moore’s Law, these new chips are purpose-designed for AI calculations, which is driving up capabilities while driving down costs. Low-power, cost-effective AI processors can be incorporated into even small mobile or remote devices, allowing onsite computation for fast, efficient decisions.
- Sophisticated software algorithms analyze and process data in the most productive locations: in the robot, in the cloud, or even in remote, extended sensors that provide additional intelligence data for the robot to anticipate needs and proactively adapt its behavior.

Using these capabilities, fleets of robots behave like a group of students taking online classes: they learn while they are online and can then perform without constant access to online content. Low-power, AI-capable devices and efficient AI techniques support new robotic systems with low latency and fast reaction times, high autonomy, and low power consumption, all key elements for success.

Artificial Intelligence in AMRs Improves Path Planning and Environmental Interaction

Mobile Industrial Robots (MiR) is driving advances in AI in mobile robots and establishing new industry expectations. Innovative AI capabilities maintain the robots’ safety protocols and drive improved efficiency in path planning and environmental interaction.

AI is implemented in advanced learning algorithms in the robot’s software as well as in remote, connected cameras that can be mounted in high-traffic areas or in the paths of fork trucks or other automated vehicles. The cameras are equipped with small, efficient embedded computers that can process anonymized data and run sophisticated analysis software to identify whether objects in the area are humans, fixed obstacles, or other types of mobile devices, such as AGVs. The cameras then feed this information to the robot, extending the robot’s understanding of its surroundings so it can adapt its behavior appropriately, even before it enters an area. The AI-capable network helps the robot avoid high-traffic areas during specific times, such as when goods are regularly delivered and transferred by fork truck, or when large crowds of workers are present, such as during breaks or shift changes.
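The idea of steering around areas that are busy at predictable times can be illustrated with a small sketch. This is not MiR's planner: the zone names, the busy windows, and the `pick_route` helper are all hypothetical, and a real system would presumably learn the windows from camera observations rather than hard-code them.

```python
from datetime import time

# Hypothetical busy windows per zone (assumed, not taken from any MiR product).
BUSY_WINDOWS = {
    "loading_dock": [(time(8, 0), time(9, 0))],          # regular fork-truck deliveries
    "canteen_corridor": [(time(12, 0), time(12, 30))],   # break-time crowds
}


def is_busy(zone: str, now: time) -> bool:
    """Return True if `now` falls inside any busy window recorded for `zone`."""
    return any(start <= now < end for start, end in BUSY_WINDOWS.get(zone, []))


def pick_route(routes: dict, now: time) -> str:
    """Prefer the route crossing the fewest busy zones; break ties by fewer zones."""
    def cost(name: str):
        zones = routes[name]
        return (sum(is_busy(z, now) for z in zones), len(zones))

    return min(routes, key=cost)


# Two hypothetical routes to the same destination.
ROUTES = {
    "direct": ["loading_dock", "aisle_3"],
    "detour": ["aisle_1", "aisle_2", "aisle_3"],
}

morning_choice = pick_route(ROUTES, time(8, 30))   # dock is busy at this time
midday_choice = pick_route(ROUTES, time(10, 0))    # nothing is busy at this time
```

In this toy setup the longer detour is chosen only while the loading dock is inside its busy window; outside that window the shorter direct route is selected.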

With AI, MiR robots can efficiently and appropriately react to different types of obstacles to improve navigation and efficiency. As an example, if they face an AMR from another manufacturer, they can predict that vehicle’s motion and adjust appropriately. When encountering a new, unknown object, the robot can be cautious and observant, collecting data so that it can calculate the best possible behavior for future interactions.

Similarly, in complex, highly dynamic environments—such as those with automated guided vehicles (AGVs) that can’t deviate from their fixed path or with human-driven fork trucks or other unpredictable vehicles—the robot’s ability to maneuver may be limited. AGVs’ safety mechanisms are typically limited to forced stops when encountering obstacles, for instance, which can block the AMR. AI-powered MiR AMRs can identify the AGV and be aware of its limitations, so that instead of stopping and requiring operator intervention to reroute it, they can automatically wait in a safe position until the AGV has passed, resuming their mission when it is safe to do so.
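The class-dependent behavior described above can be sketched as a simple lookup from obstacle type to response. The enum names and the mapping are illustrative assumptions, not MiR's software; in particular, the fork-truck response is a guess, since the article only says the robot behaves "appropriately" around other vehicles.

```python
from enum import Enum, auto


class ObstacleType(Enum):
    PERSON = auto()
    FIXED_OBSTACLE = auto()
    AGV = auto()
    FORK_TRUCK = auto()
    UNKNOWN = auto()


class Action(Enum):
    NAVIGATE_AROUND = auto()             # the standard, non-AI reaction
    WAIT_IN_SAFE_POSITION = auto()       # let a fixed-path vehicle pass first
    PROCEED_CAUTIOUSLY_AND_LOG = auto()  # observe and collect data for later


# Hypothetical policy table; unmapped types fall back to the standard reaction.
POLICY = {
    ObstacleType.AGV: Action.WAIT_IN_SAFE_POSITION,         # AGVs cannot leave their path
    ObstacleType.FORK_TRUCK: Action.WAIT_IN_SAFE_POSITION,  # assumption: treat like an AGV
    ObstacleType.UNKNOWN: Action.PROCEED_CAUTIOUSLY_AND_LOG,
}


def choose_action(obstacle: ObstacleType) -> Action:
    """Pick a response for a classified obstacle, defaulting to navigating around it."""
    return POLICY.get(obstacle, Action.NAVIGATE_AROUND)
```

Keeping the default equal to the robot's existing reaction means a missing or wrong classification degrades to today's behavior rather than to something new.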

While the robots’ built-in safety mechanisms will always stop the robot from colliding with an object, person, or vehicle in its path, other vehicles, such as human-driven fork trucks, may not have those capabilities, leaving the risk of one of them running into the robot. A MiR robot system can detect high-traffic areas before it arrives and can identify other vehicles and behave appropriately to decrease the risk of collision. In this way, AMRs are not only improving their own behavior with AI but are also adapting to other vehicles’ limitations.

Left: The MiR AI Camera detects an object it was configured to react to, which prevents MiR robots from crossing the zone marked in red. Right: The MiR AI Camera detects a person and is configured not to react, since the person can efficiently avoid the robot; MiR robots can cross the zone marked in green.
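The two cases in the figure amount to a per-zone configuration of which detected classes should close the zone. A minimal sketch follows, assuming a hypothetical `CameraZone` type and string class labels; none of these names come from the MiR AI Camera's actual interface.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class CameraZone:
    """A camera-watched zone and the object classes it is configured to react to."""
    name: str
    react_to: frozenset[str]

    def is_blocked(self, detected: Iterable[str]) -> bool:
        """The zone is blocked only if a detected class is in the configured set."""
        return not self.react_to.isdisjoint(detected)


# Hypothetical configuration: react to fork trucks, ignore people.
dock_zone = CameraZone(name="dock_crossing", react_to=frozenset({"fork_truck"}))

blocked_by_truck = dock_zone.is_blocked(["fork_truck"])   # left panel: zone closed
blocked_by_person = dock_zone.is_blocked(["person"])      # right panel: zone stays open
```

Leaving a class out of `react_to` is a deliberate choice in this sketch: it mirrors the figure's reasoning that a person can step around the robot, while the robot's own safety scanners still apply inside the zone.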

Addressing AI Concerns

AI is still new enough to raise concerns around the security and privacy of the data being captured and used, as well as around safety if AI systems fail or are interfered with. Key questions for customers as they consider AI-powered systems include:

- Where and how is data stored, secured, and processed?
- How long is data stored?
- What personally identifiable information is captured?
- What back-up safety mechanisms are in place?

To protect security and privacy, MiR robots and AI cameras only send decisions, not images. Since all the required computational power exists inside each device, data captured by vision sensors is processed right away into shapes, sizes, and colors, then classified into specific categories and used for decision-making. Therefore, the only kind of data that can be sent are commands that AMRs can understand, such as stop or resume; information on changes to the environment, such as a blocked path or a crowded area; or new actions to be taken, such as the choice of an alternative route.
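One way to picture "decisions, not images" is a message type that has no field capable of carrying pixel data. The sketch below is an assumption about what such a payload could look like; the `Decision` class, the three kind labels, and the JSON encoding are hypothetical and do not describe MiR's actual protocol.

```python
import json
from dataclasses import asdict, dataclass

# Hypothetical message kinds, mirroring the three categories named in the text.
ALLOWED_KINDS = {"command", "environment_change", "action"}


@dataclass(frozen=True)
class Decision:
    """A small, image-free message a camera or robot could send after on-device analysis."""
    kind: str
    value: str

    def __post_init__(self) -> None:
        if self.kind not in ALLOWED_KINDS:
            raise ValueError(f"unsupported decision kind: {self.kind!r}")


def to_wire(decision: Decision) -> str:
    """Serialize a decision to JSON; only the two short text fields are ever encoded."""
    return json.dumps(asdict(decision), sort_keys=True)


stop_message = to_wire(Decision(kind="command", value="stop"))
blocked_message = to_wire(Decision(kind="environment_change", value="blocked_path"))
```

Because the type only holds two short strings, raw sensor frames cannot be attached by accident; anything outside the allowed kinds is rejected when the message is constructed.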

While safety in robotic AI systems is sometimes raised as a concern, MiR’s primary safety mechanisms are core to the robot and cannot be overridden by AI decisions. For instance, while laser scanners on the robot provide data for AI systems, they also make fundamental safety decisions to mechanically stop MiR robots from proceeding under any circumstances if there are people or obstacles in the robot’s path.
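The layering described here, where the safety function always wins over the AI layer, can be sketched as a final arbitration step. This is a conceptual illustration only: the function name and string commands are hypothetical, and real safety functions are implemented in certified hardware and firmware rather than in application code like this.

```python
def resolve_motion(ai_command: str, scanner_path_clear: bool) -> str:
    """Return the command that is actually executed.

    The (hypothetical) safety input is checked last and unconditionally,
    so no AI decision can turn a blocked path into motion.
    """
    if not scanner_path_clear:
        return "stop"
    return ai_command


overridden = resolve_motion("proceed", scanner_path_clear=False)  # AI wants to go, path blocked
allowed = resolve_motion("proceed", scanner_path_clear=True)      # AI wants to go, path clear
```

The AI layer can still ask for more caution than the scanners require (for example by requesting a stop on a clear path), but never less.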

The Future of Robotic AI

Mobile robots will continue to be collaborative entities, and with AI, the technological barrier between them and humans will continue to shrink, increasing collaboration and efficiency.

As AI advances, we will gain the ability to interact with robots more naturally using speech or gestures. That might include holding up a hand to make the robot stop, pointing it to a preferred direction, or waving it on to pass or follow—or simply telling it, “This hallway will be blocked for the next two hours, take another route until then.”

While mobile robots will still be a controllable tool with emergency stop buttons, they will gain autonomy that will make them even more valuable. They will be able to understand where their routine can be improved and suggest better paths to their destination, more productive times of the day to execute tasks, other robots that could be deployed for more efficient workflows, and the most appropriate time to recharge.

AI-powered AMRs will help turn workplaces into organic, data-driven environments, in which robots share relevant data from their own or remote sensors to help fleets of robots make informed decisions. With this data-sharing model, each robot essentially has access to every sensor in every other robot or camera, providing it with a much more detailed view of its entire environment and thus enabling much more efficient path planning.

AI: Powering the Next Industrial Revolution

As in previous industrial revolutions, AI and robotics will make many laborious and undesirable tasks obsolete. With AI, MiR robots can manage repetitive, low-value material-transport tasks even more efficiently and cost-effectively, freeing humans for more meaningful activities. And with severe labor shortages holding back companies around the world, smarter mobile robots will allow companies to offer rewarding roles that help them attract and retain valued employees.

The content & opinions in this article are the author’s and do not necessarily represent the views of RoboticsTomorrow
