Distributed Control Systems Simplify the Three C's of Robotics

Warren Miller | Mouser Electronics

You may be familiar with Asimov’s three laws of robotics, made famous in his many science fiction stories. However, you may not realize that three other organizing principles (perhaps not strong enough to be called laws), the three C’s of robotics, play a major part in current robotics designs.

Borrowed from the military, Communications, Command and Control (sometimes called 3C) are the three key organizing principles for acquiring, processing and disseminating information across distributed “force elements.” Today’s robotics implementations can also be considered a collection of distributed force elements, although they rely primarily on mechanical force, and these three “C’s” can be applied to the design of distributed robotic systems as well.

Communications

Communications is probably the easiest element to understand when looking at the design of a distributed system. The multiple elements used for imaging, positioning, environmental sensing, power, and motor control (just to name a few) all need to communicate with each other and with a centralized controller that manages and coordinates the detailed activities to accomplish a task. Standard communications interfaces, either wired or wireless, are used to transfer sensing information from the edges of the system to the central controller. When the central controller needs to send instructions to the edge elements, perhaps to request a sensor update or advance a stepper motor, the same interface is used. Microcontrollers (MCUs) are usually the intelligence within the end nodes and they support a variety of communications interfaces to simplify data transfer.

It is often convenient to minimize the data traffic from the edge to the central controller, so additional processing power is moved to the edge nodes. This allows some functions to be done locally within the edge nodes, eliminating intermediate data traffic. When edge devices are more autonomous, only critical updates or task requests need to involve the main controller. As an example, sensor data often needs to be checked to see if it is within the allowed range. If each measurement were sent to the central controller, it would generate significant traffic and require additional processing power in the controller. If the sensor can do the processing locally and report to the controller only when the readings are out of bounds (or heading in that direction), significant central-controller data-transfer bandwidth and processing power can be saved.
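The local range check described above might look roughly like the following. This is a minimal sketch, not code from any particular MCU vendor: the `EdgeMonitor` class, its limits, the warning `margin`, and the message strings are all hypothetical names chosen for illustration, and Python is used only for readability (a real edge node would more likely run C).

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class EdgeMonitor:
    """Hypothetical edge-node filter: forwards a reading only when it matters."""
    low: float                     # lowest allowed value (assumed limit)
    high: float                    # highest allowed value (assumed limit)
    margin: float                  # width of the "approaching the limit" band
    last: Optional[float] = None   # previous reading, used to detect the trend

    def check(self, reading: float) -> Optional[str]:
        """Return a message for the central controller, or None to stay silent."""
        previous, self.last = self.last, reading
        if reading < self.low or reading > self.high:
            return f"ALERT out of range: {reading}"
        # Inside the range: warn only when near a limit and still moving toward it.
        if previous is not None:
            if reading > self.high - self.margin and reading > previous:
                return f"WARN approaching high limit: {reading}"
            if reading < self.low + self.margin and reading < previous:
                return f"WARN approaching low limit: {reading}"
        return None


monitor = EdgeMonitor(low=0.0, high=100.0, margin=10.0)
readings = [50.0, 52.0, 91.0, 95.0, 101.0, 60.0]
messages = [m for m in (monitor.check(r) for r in readings) if m is not None]
```

With these illustrative numbers, only three of the six readings produce a message for the central controller; the rest are handled entirely at the edge.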

For complex sensing algorithms, multiple data streams may need to be combined and processed to see if action must be taken by the central controller. For example, imaging information along with speed and distance measurements may show that an object is in the way of the current movement task. If these readings can be combined, perhaps using a decentralized local controller that has access to several key edge sensors, an alert can be sent to the central controller and a decision made on how to react.
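As a rough illustration of combining several readings in an intermediate controller, the sketch below merges an imaging result with distance and speed measurements and raises an alert only when the estimated time to contact is short. The function name `obstacle_alert`, the 2-second threshold, and the alert format are assumptions made for this example, not part of any specific product.

```python
from typing import Optional


def obstacle_alert(object_detected: bool,
                   distance_m: float,
                   speed_m_s: float,
                   min_time_s: float = 2.0) -> Optional[dict]:
    """Combine imaging, distance and speed readings into a single alert.

    Returns a small alert record for the central controller when the detected
    object would be reached in less than min_time_s, otherwise None.
    """
    # No object in view, or the robot is not moving forward: nothing to report.
    if not object_detected or speed_m_s <= 0.0:
        return None
    time_to_contact = distance_m / speed_m_s
    if time_to_contact < min_time_s:
        return {"event": "obstacle", "time_to_contact_s": round(time_to_contact, 2)}
    return None


near = obstacle_alert(object_detected=True, distance_m=1.0, speed_m_s=1.0)
far = obstacle_alert(object_detected=True, distance_m=10.0, speed_m_s=1.0)
```

Here `near` carries an alert while `far` is `None`, so the central controller only hears about the case that needs a decision.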

These complex functions often require advanced signal-processing capabilities that are now available in even relatively low-end MCUs. As an example, in the Texas Instruments MSP430 MCU Family, even many of the low-end devices have a hardware multiply-and-accumulate (MAC) function. This capability facilitates the simple digital signal processing (DSP) algorithms that are often required when combining multiple sensor readings, called sensor fusion, for intelligent and autonomous operation. Many MCUs offer even higher-performance DSP capabilities, often used for more complex tasks such as imaging systems. The simple MAC is sufficient for a wide range of low-level tasks and can often significantly improve power efficiency over implementations that use more complex devices.
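To show why a MAC unit matters, here is a minimal sketch of a finite impulse response (FIR) filter, one common DSP building block, written so that each inner-loop step is a single multiply-and-accumulate. The function name `fir_filter` and the four-tap averaging coefficients are illustrative assumptions; on an MCU with a hardware MAC, the marked line is the operation the hardware would accelerate.

```python
def fir_filter(samples, coefficients):
    """Apply an FIR filter using explicit multiply-accumulate steps."""
    taps = len(coefficients)
    history = [0.0] * taps          # most recent sample first, zero-initialized
    output = []
    for sample in samples:
        # Shift the new sample into the delay line, dropping the oldest one.
        history = [sample] + history[:-1]
        acc = 0.0
        for coeff, value in zip(coefficients, history):
            acc += coeff * value    # one multiply-and-accumulate (MAC) step
        output.append(acc)
    return output


# Four-tap moving average smoothing a noisy, alternating sensor signal.
smoothed = fir_filter([4.0, 8.0, 4.0, 8.0], [0.25, 0.25, 0.25, 0.25])
# smoothed == [1.0, 3.0, 4.0, 6.0]
```

The early outputs are low because the delay line starts at zero; once it fills, the output settles toward the average of the input.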

Command

Once the main controller has gathered the information from the smart sensors and intermediate controllers, it needs to decide on the next task. If, for example, an autonomous robot is looking for survivors buried in rubble after an earthquake, and its infrared sensors detect heat, the controller needs to decide what to do. Should it investigate further? Should it first sense the environment for structural integrity? Does it need to get closer to determine if the heat signature is a person? Should it “ask” a human supervisor to weigh in on the next step? These questions all need to be processed by the controller before the next command is determined.
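One very simple way to process these questions is an ordered set of rules, sketched below. The ordering, the command names, and the 0.8 confidence threshold are purely hypothetical choices for illustration; a real search-and-rescue robot would need a far more careful policy.

```python
def next_command(heat_detected: bool,
                 structure_checked: bool,
                 structure_safe: bool,
                 person_confidence: float) -> str:
    """Pick the next command from the current picture of the environment."""
    if not heat_detected:
        return "CONTINUE_SEARCH"      # nothing of interest yet
    if not structure_checked:
        return "SCAN_STRUCTURE"       # check structural integrity first
    if not structure_safe:
        return "ASK_SUPERVISOR"       # too risky to decide alone
    if person_confidence < 0.8:
        return "MOVE_CLOSER"          # heat source not yet identified
    return "REPORT_SURVIVOR"


first = next_command(True, False, False, 0.0)
second = next_command(True, True, True, 0.4)
third = next_command(True, True, True, 0.9)
```

In this sketch the three calls yield a structural scan, then a closer approach, then a report, which mirrors the sequence of questions in the paragraph above.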

In many cases more information might be needed, since the edge nodes may send only an alert without the data that triggered it. If the required processing is more than the edge nodes can handle, the central processor will need to do substantial computational “heavy lifting.” A power-efficient, high-performance processor is a good choice for small autonomous robots running on battery power. The main controller also needs to interface to a wide variety of communications channels for the various edge nodes and intermediate controllers. High-speed interfaces, like Ethernet and USB, are needed for the intermediate controllers. Similarly, lower-speed interfaces, like SPI and UART, are needed for the lower-speed sensors.

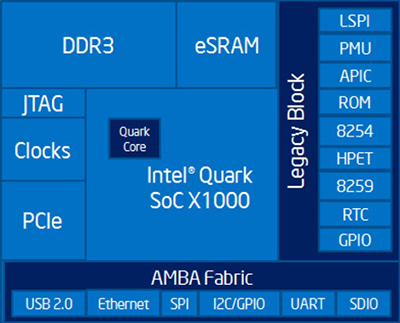

A new generation of efficient embedded processors has the features required for these new applications. For example, the new Intel Quark SoC X10xx processor has a power-efficient CPU core as well as multiple communications interfaces, including Ethernet, USB, PCIe 2.0, SPI, I2C and UART. Access to off-chip memory, in the form of high-capacity DDR or lower-capacity but faster SRAM, is supported by embedded memory controller blocks. For high-reliability applications, error-correcting code (ECC) memory can find and fix memory errors automatically. Advanced security features improve resistance to malicious intrusions, a growing concern as embedded systems face increasing attacks by organized hackers. The Quark X10xx family even has members with a secure boot capability that detects attempts to tamper with the start-up boot code, one of the most aggressive and effective methods for hacking into embedded networks.

Figure 2: Intel Quark SoC X1000 Functional Block Diagram. (Source: Intel)

Control

At some point in the distributed robotics system, electrical signals need to be translated into mechanical movement. The mechanical action may involve moving a heavy chassis at high speeds (and stopping at precisely the right place) or manipulating a mechanical “hand” to precisely grasp and lift a small object. In either case a motor is probably involved in translating the electrical signals into the required mechanical motion. Designing with a wide range of motors has become much simpler in recent years as MCU manufacturers have accelerated their support for motor-control applications.

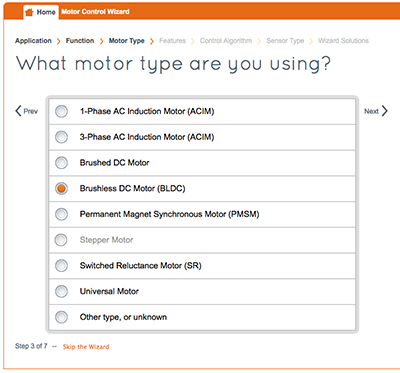

MCUs have been used in motor control for years, and as new algorithms have evolved to improve efficiency, increase reliability, reduce wear and extend operating lifetime, MCUs have had to continually add new capabilities to keep up with these changes. For example, improved processing capabilities, including digital-signal processing and floating point, can now offload data pre-processing tasks from the main CPU. Additionally, hardware timers can implement the low-level tasks of shaping currents and voltages used for control algorithms, further freeing up the main CPU and improving system efficiency. MCU manufacturers have also expanded their software offerings to include specialized tools and proven code to simplify motor-control implementations. Some of the most advanced offerings allow control algorithms to be configured by the designer, turning a complicated design process into using an easy, guided “wizard” to create the required application code.
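As a hedged illustration of the kind of control algorithm involved, the sketch below implements a basic proportional-integral (PI) speed loop whose output is a PWM duty cycle between 0 and 1. It is not taken from any vendor's motor-control library: the class name `PISpeedController`, the gains, and the simple anti-windup rule are assumptions for this example. On a real MCU, a hardware timer would turn the duty cycle into the actual PWM waveform.

```python
class PISpeedController:
    """Minimal PI speed loop producing a PWM duty cycle in the range 0.0 to 1.0."""

    def __init__(self, kp: float, ki: float, dt: float):
        self.kp = kp            # proportional gain (assumed value)
        self.ki = ki            # integral gain (assumed value)
        self.dt = dt            # control-loop period in seconds
        self.integral = 0.0     # accumulated speed error

    def update(self, target_rpm: float, measured_rpm: float) -> float:
        error = target_rpm - measured_rpm
        candidate = self.integral + error * self.dt
        duty = self.kp * error + self.ki * candidate
        clamped = min(1.0, max(0.0, duty))
        # Simple anti-windup: keep the new integral only if the output is not saturated.
        if clamped == duty:
            self.integral = candidate
        return clamped


controller = PISpeedController(kp=0.001, ki=0.002, dt=0.01)
startup_duty = controller.update(target_rpm=1000.0, measured_rpm=0.0)
cruise_duty = controller.update(target_rpm=1000.0, measured_rpm=900.0)
```

At start-up the large speed error saturates the output at full duty, and the integral term is held so it does not wind up; once the motor is near the target, the duty cycle drops to a small corrective value.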

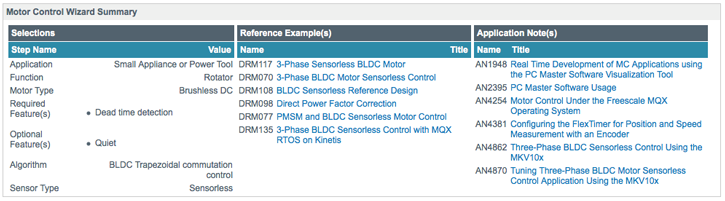

Reference designs that help evaluate and design motor-control applications further simplify the development process. The Freescale Kinetis MCU family, for example, has multiple motor-control reference designs for a variety of applications. To help designers quickly navigate through the many possible choices, Freescale has created a web-based solution advisor to help narrow down the mix of features and capabilities needed for various target applications. As shown at the top of Figure 3, the Freescale advisor walks designers through a series of questions about applications, functions, motor types, features, control algorithms and sensor types. After the selections are captured, it generates a report showing the devices, development boards, reference designs and application notes relevant to the designer’s specific requirements.

Figure 3A & 3B: Motor Control Solution Advisor helps design engineers find the right reference designs for their applications. (Source: Freescale Semiconductor)

Summary

Now that you know the three “C’s” of robotics, you can apply these elements to any embedded design, even if it is not a robotics application. Distributed sensing with optimized communications, efficient and intelligent commands for task execution, and intelligent control of the electromechanical interface are all excellent organizing principles for any complex embedded design. Just make sure you don't allow your embedded system to violate any of Asimov’s three laws of robotics. That could be a real problem.

The content & opinions in this article are the author’s and do not necessarily represent the views of RoboticsTomorrow
